SOComputing Published 2020-10-16 23:59:52

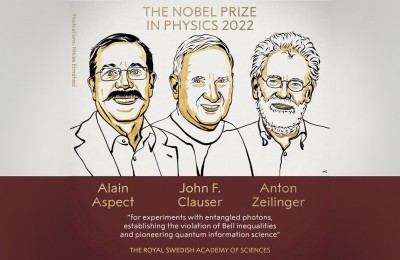

The INRC

Accelerating research and adoption of breakthrough AI systems. The Intel Neuromorphic Research Community (Intel NRC) is an ecosystem of academic groups, government labs, research institutions, and companies around the world working with Intel to further neuromorphic computing and develop innovative AI applications.

Leading Together. The Intel Neuromorphic Research Community (INRC) is a global network of more than 75 research groups that are committed to delivering on the promise of neuromorphic computing and making the technology a commercial reality.

Neuromorphic Computing

The first generation of AI was rules-based and emulated classical logic to draw reasoned conclusions within a specific, narrowly defined problem domain. It was well suited to monitoring processes and improving efficiency, for example. The second, current generation is largely concerned with sensing and perception, such as using deep-learning networks to analyze the contents of a video frame.

A coming next generation will extend AI into areas that correspond to human cognition, such as interpretation and autonomous adaptation. This is critical to overcoming the so-called “brittleness” of AI solutions based on neural network training and inference, which depend on literal, deterministic views of events that lack context and commonsense understanding. Next-generation AI must be able to address novel situations and abstraction to automate ordinary human activities.

Intel Labs is driving computer-science research that contributes to this third generation of AI. Key focus areas include neuromorphic computing, which is concerned with emulating the neural structure and operation of the human brain, as well as probabilistic computing, which creates algorithmic approaches to dealing with the uncertainty, ambiguity, and contradiction in the natural world.

Neuromorphic Computing Research Focus

The key challenge in neuromorphic research is matching a human's flexibility and ability to learn from unstructured stimuli while achieving the energy efficiency of the human brain. The computational building blocks within neuromorphic computing systems are logically analogous to neurons. Spiking neural networks (SNNs) are a novel model for arranging those elements to emulate the natural neural networks that exist in biological brains.

Each “neuron” in the SNN can fire independently of the others, and in doing so, it sends pulsed signals to other neurons in the network that directly change the electrical states of those neurons. By encoding information within the signals themselves and their timing, SNNs simulate natural learning processes by dynamically remapping the synapses between artificial neurons in response to stimuli.
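The spiking behavior described above can be illustrated with a minimal sketch. It uses a textbook leaky integrate-and-fire (LIF) neuron, which is an assumption made for illustration and not a description of Loihi's actual neuron model. The class name `LIFNeuron`, the `simulate` helper, and all parameter values are hypothetical, and synaptic plasticity (the remapping of synapses) is deliberately left out to keep the example short.

```python
from dataclasses import dataclass, field


@dataclass
class LIFNeuron:
    """Textbook leaky integrate-and-fire neuron (illustrative, not Loihi's model)."""
    threshold: float = 1.0   # membrane potential at which the neuron fires
    leak: float = 0.9        # fraction of potential retained per time step
    potential: float = 0.0   # current membrane potential
    spike_times: list = field(default_factory=list)

    def step(self, t: int, input_current: float) -> bool:
        """Advance one time step; return True if the neuron spikes."""
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.spike_times.append(t)
            self.potential = 0.0  # reset after firing
            return True
        return False


def simulate(steps: int = 50, drive: float = 0.3, weight: float = 0.6):
    """Drive a presynaptic neuron with constant current.

    Each presynaptic spike delivers a pulse of size `weight` to the
    postsynaptic neuron, directly changing its electrical state.
    """
    pre, post = LIFNeuron(), LIFNeuron()
    for t in range(steps):
        pre_spiked = pre.step(t, drive)
        post.step(t, weight if pre_spiked else 0.0)
    return pre.spike_times, post.spike_times


if __name__ == "__main__":
    pre_spikes, post_spikes = simulate()
    print("presynaptic spikes: ", pre_spikes)
    print("postsynaptic spikes:", post_spikes)
```

In this sketch the information passed between the two neurons is carried entirely by when the pulses arrive: the postsynaptic neuron fires only when several incoming spikes land close enough together to outpace its leak.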

Producing a Silicon Foundation for Brain-Inspired Computation

To provide functional systems for researchers to implement SNNs, Intel Labs designed Loihi, its fifth-generation self-learning neuromorphic research test chip, which was introduced in November 2017. This 128-core design is based on a specialized architecture that is optimized for SNN algorithms and fabricated on 14nm process technology. Loihi supports the operation of SNNs that do not need to be trained in the conventional manner of, for example, a convolutional neural network. These networks also become more capable (“smarter”) over time.

The Loihi chip includes a total of some 130,000 neurons, each of which can communicate with thousands of others. Developers can access and manipulate on-chip resources programmatically by means of a learning engine that is embedded in each of the 128 cores. Because the hardware is optimized specifically for SNNs, it supports dramatically accelerated learning in unstructured environments for systems that require autonomous operation and continuous learning, with extremely low power consumption, plus high performance and capacity.

Intel Labs is committed to giving the research community at large access to test systems based on Loihi. Because the technology is still in a research phase (as opposed to production), only a limited number of Loihi-based test systems exist. To expand access, Intel Labs has developed a cloud-based platform that gives the research community access to scalable Loihi-based infrastructure.

Collaborating to Advance Neuromorphic Computing

Intel Labs has established the Intel Neuromorphic Research Community (INRC), a collaborative research effort that brings together teams from academic, government, and industry organizations around the world to overcome the wide-ranging challenges facing the field of neuromorphic computing. Members of the INRC receive access to Intel's Loihi research chip in support of their neuromorphic projects. Intel offers several forms of support to engaged members, including Loihi hardware, academic grants, early access to results, and invitations to community workshops. Membership is free and open to all qualified groups.

Probabilistic Computing Research Focus

The fundamental uncertainty and noise that are modulated into natural data are a key challenge for the advancement of AI. Algorithms must become adept at tasks based on natural data, which humans manage intuitively but computer systems have difficulty with.

Having the capability to understand and compute with uncertainties will enable intelligent applications in diverse AI domains. In medical imaging, for example, uncertainty measures can be used to prioritize which images a radiologist needs to look at and to highlight the regions of an image with low uncertainty. In the case of a smart home assistant, an agent can ask the user clarifying questions to better understand a request when there is high uncertainty in intent recognition.

In the autonomous vehicles domain, the systems piloting autonomous cars have many tasks that are well suited to conventional computing, such as navigating along a GPS route and controlling speed. The current state of AI enables the systems to recognize and respond to their surroundings, such as avoiding collision with an unexpected pedestrian.

To advance those capabilities into the realm of fully autonomous driving, however, the algorithms must incorporate the type of expertise that humans develop as experienced drivers. Sensors such as GPS and cameras exhibit uncertainty in their position estimates. A ball that children are playing with in a nearby yard could roll into the street, and one of the kids may decide to chase it. It’s wise to be wary of an aggressive driver in the next lane. In these cycles of perception and response, both the inputs and the outputs carry a degree of uncertainty. Decision making in such scenarios depends on perceiving and understanding the environment well enough to predict future events and decide on the correct course of action. The perception and understanding tasks therefore need to be aware of the uncertainty inherent in them.
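One way to make sensor uncertainty concrete is to fuse two noisy position estimates into a single estimate whose uncertainty is explicit. The sketch below uses inverse-variance weighting of two independent Gaussian estimates, a standard statistical technique chosen here for illustration; it is not taken from the source. The function name `fuse` and the example numbers are hypothetical.

```python
def fuse(mean_a: float, var_a: float, mean_b: float, var_b: float):
    """Inverse-variance weighted fusion of two independent Gaussian estimates.

    Returns the fused mean and fused variance. The less noisy sensor
    (smaller variance) receives the larger weight.
    """
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused_var = 1.0 / (w_a + w_b)
    fused_mean = fused_var * (w_a * mean_a + w_b * mean_b)
    return fused_mean, fused_var


if __name__ == "__main__":
    # Assumed readings: GPS says 10.0 m (variance 4.0),
    # camera says 12.0 m (variance 1.0).
    mean, var = fuse(10.0, 4.0, 12.0, 1.0)
    print(f"fused position: {mean:.2f} m, variance: {var:.2f}")
```

The fused variance is always smaller than either input variance, which reflects the intuition that two imperfect sensors together say more than either one alone.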

Managing and Modeling Uncertainty

Probabilistic computing generally addresses problems of dealing with uncertainty, which is inherently built into natural data. There are two main ways that uncertainty plays a role in AI systems:

Uncertainty in perception and recognition of natural data. The contributing sources include input uncertainty arising from hardware sensors and the environment, as well as recognition model uncertainty caused by the disparity between the training data and the data being recognized.

Uncertainty in understanding and predicting dynamic events. Human movement and intent prediction is one example where such uncertainty is exhibited. Any agent trying to predict such dynamic events needs to model human intent and understand the uncertainties in the model. Observations can then be used to continuously reduce those uncertainties for efficient intent and goal prediction.

Key problems in this area revolve around efficiently characterizing and quantifying uncertainty, incorporating that uncertainty into computations and outcomes, and storing a model of those interacting uncertainties with the corresponding data.

One implication of the fact that outputs are expressed as probabilities, rather than deterministic values, is that all conclusions are tentative and associated with specific degrees of confidence. To extend the autonomous driving example above, the children’s ball disappearing from view or increasingly erratic behavior by the aggressive driver might increase confidence that such a potential hazard will require a response.
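The way new observations raise or lower confidence in a hazard can be sketched with Bayes' rule. This is a generic illustration, not a description of any Intel system; the function name `bayes_update` and every probability below are assumed values chosen only to show the mechanics.

```python
def bayes_update(prior: float, p_obs_given_hazard: float, p_obs_given_safe: float) -> float:
    """Posterior probability of a hazard after one observation (Bayes' rule)."""
    evidence = p_obs_given_hazard * prior + p_obs_given_safe * (1.0 - prior)
    return p_obs_given_hazard * prior / evidence


if __name__ == "__main__":
    # Assumed prior: 5% chance that a child will run into the street.
    belief = 0.05

    # Observation 1 (assumed likelihoods): the ball disappears from view.
    belief = bayes_update(belief, p_obs_given_hazard=0.6, p_obs_given_safe=0.1)
    print(f"after ball disappears: {belief:.2f}")

    # Observation 2 (assumed likelihoods): a child moves toward the curb.
    belief = bayes_update(belief, p_obs_given_hazard=0.7, p_obs_given_safe=0.05)
    print(f"after child moves:     {belief:.2f}")
```

Each conclusion remains tentative: the belief is a probability that keeps moving as evidence arrives, and an application would pick its own threshold for when to respond.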

In addition to enabling intuition and prediction in AI, probabilistic methods can also be used to impart a degree of transparency to existing AI recognition systems that tend to operate as a black box. For example, today’s deep learning engines output a result without a measure of uncertainty. Probabilistic methods can augment such engines to output a principled uncertainty estimate along with the result, making it possible for an application to judge the reliability of the prediction. Making uncertainty visible helps to establish trust in the AI system’s decision making.
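As a rough sketch of what "a result plus an uncertainty estimate" can look like, the example below averages class probabilities from several stochastic forward passes and reports the normalized entropy of the average. Averaging repeated passes (for instance from an ensemble or from dropout at inference time) is one common approach and is an assumption here, not the method named by the source. The function `predict_with_uncertainty` and the sample values are hypothetical.

```python
import math


def predict_with_uncertainty(samples):
    """Return (predicted class index, uncertainty in [0, 1]).

    `samples` is a list of probability vectors, one per stochastic
    forward pass of some classifier. Uncertainty is the entropy of the
    averaged prediction, normalized by the maximum possible entropy.
    """
    n_classes = len(samples[0])
    mean = [sum(s[c] for s in samples) / len(samples) for c in range(n_classes)]
    label = max(range(n_classes), key=lambda c: mean[c])
    entropy = -sum(p * math.log(p) for p in mean if p > 0.0)
    return label, entropy / math.log(n_classes)


if __name__ == "__main__":
    # Passes that agree: low uncertainty.
    agree = [[0.95, 0.05], [0.90, 0.10], [0.97, 0.03]]
    # Passes that disagree: high uncertainty.
    disagree = [[0.90, 0.10], [0.10, 0.90], [0.55, 0.45]]
    print(predict_with_uncertainty(agree))
    print(predict_with_uncertainty(disagree))
```

An application could use the second value to decide whether to act on the prediction, defer to a human, or ask for more input.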

Whereas deterministic processes have predictable, repeatable outcomes, probabilistic ones do not, because of random influences that cannot be known or measured. This process of incorporating the noise, uncertainties, and contradictions of natural data is a vital aspect of building computers capable of human (or super-human) levels of understanding, prediction, and decision-making. This work builds on prior applications of randomness in data analysis, such as the well-established use of Monte Carlo algorithms to model probability.
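The Monte Carlo idea mentioned above can be sketched in a few lines: draw many random samples of the uncertain inputs and count how often an outcome occurs. The scenario, the function name `collision_probability`, and every constant (reaction-time range, deceleration, speed noise) are assumptions made purely for illustration.

```python
import random


def collision_probability(gap_m: float, speed_mean: float, speed_std: float,
                          n: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(stopping distance > available gap).

    Speed (m/s) is sampled from a Gaussian and reaction time (s) from a
    uniform range; both distributions are assumed, not measured.
    """
    rng = random.Random(seed)       # seeded so the estimate is repeatable
    decel = 7.0                     # assumed braking deceleration, m/s^2
    hits = 0
    for _ in range(n):
        speed = max(0.0, rng.gauss(speed_mean, speed_std))
        reaction = rng.uniform(0.5, 1.5)
        stopping = speed * reaction + speed ** 2 / (2.0 * decel)
        if stopping > gap_m:
            hits += 1
    return hits / n


if __name__ == "__main__":
    for gap in (10.0, 28.0, 60.0):
        p = collision_probability(gap, speed_mean=14.0, speed_std=2.0)
        print(f"gap {gap:5.1f} m -> estimated collision probability {p:.3f}")
```

The output is a probability, not a yes-or-no answer, which is the kind of result a probabilistic system would pass on to its decision logic.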

Enabling a Probabilistic Computing Ecosystem

In addition to its main thrust—dealing with incomplete, uncertain data—probabilistic computing depends for its success on being integrated collaboratively and holistically into the broader universe of computing technology. Intel Labs is helping to build the necessary bridges across entities in academia and industry through the Intel Strategic Research Alliance for Probabilistic Computing.

This research initiative is dedicated to advancing probabilistic computing from the lab to reality, by integrating probability and randomness into fundamental hardware and software building blocks. Drawing together and enabling research in these areas, the Alliance works toward engineering the capacities for perception and judgment to enable next-generation AI.

Loihi: A Neuromorphic Manycore Processor with On-Chip Learning

References

https://www.intel.com/content/www/us/en/research/neuromorphic-computing.html